Computer Vision for OSINT

VFRAME develops and deploys computer vision technologies for analyzing conflict zone media using neural networks powered by synthetic data. VFRAME is now partnered with the NGO Tech 4 Tracing.

Featured Research

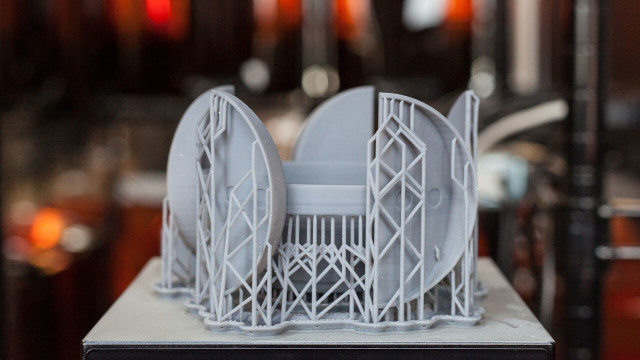

- 9N235/9N210 Submunition Object Detector: building a 9N235/9N210 submunition object detector with photography, photogrammetry, 3D rendering, 3D printing, and convolutional neural networks

News

- 2025: VFRAME founder Adam Harvey joins Tech 4 Tracing as Chief Technologist to implement and scale up computer vision technologies

- Nov 16, 2023: VFRAME + Tech4Tracing.org present latest technical developments at the GICHD Innovation Conference

- 2022: VFRAME partners with Tech4Tracing to build mine detection technology with support from the EU

Publications

- June 2023: Peer-reviewed research paper on the development of the 9N235 object detector published in the Journal of Conventional Weapons Destruction (open access)

Press

- Financial Times: Researchers train AI on ‘synthetic data’ to uncover Syrian war crimes

- Economist: AI helps scour video archives for evidence of human-rights abuses

- Der Spiegel: How Artificial Intelligence Helps Solve War Crimes

- Wall Street Journal: AI Emerges as Crucial Tool for Groups Seeking Justice for Syria War Crimes

- MIT Technology Review: AI Could Help Human Rights Activists Prove War Crimes

Project Development

About VFRAME

Human rights researchers often rely on videos shared online to document war crimes, atrocities, and human rights violations. Manually reviewing these videos is expensive, does not scale, and can cause vicarious trauma. As an increasing number of videos are posted, a new approach is needed to understand these large datasets.

Since 2017, VFRAME has worked with Mnemonic.org, a Berlin-based organization dedicated to documenting war crimes and human rights violations, to develop computer vision tools that address these challenges.

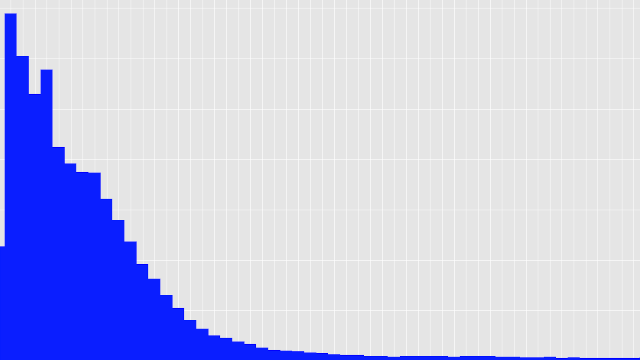

Specifically, VFRAME has been developing a scalable computer vision system that analyzes large video archives to detect illegal munitions, along with redaction tools that blur faces. It can detect objects such as the RBK-250 munition found in videos from the Syrian conflict, or the 9N235 submunition documented in Ukraine, with up to 99% accuracy at over 400 FPS on a single workstation. The vocabulary of objects is growing and will soon include the AO-2.5RT, Uragan/Smerch rockets, and the RBK-500.
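To put the 400 FPS figure in context, the short calculation below estimates how long a single workstation would need to scan a video archive. It is only a back-of-the-envelope sketch: the function name `processing_hours`, the 30 FPS source frame rate, and the 10,000-hour archive size are illustrative assumptions, not figures from VFRAME, and it assumes every frame is analyzed with no decoding or I/O overhead.

```python
def processing_hours(video_hours: float,
                     video_fps: float = 30.0,      # assumed source frame rate
                     detector_fps: float = 400.0   # throughput quoted above
                     ) -> float:
    """Estimate compute hours needed to run a detector over every frame."""
    total_frames = video_hours * 3600 * video_fps
    return total_frames / detector_fps / 3600


# Hypothetical archive of 10,000 hours of 30 FPS footage.
hours = processing_hours(10_000)
print(f"{hours:.0f} hours of compute, about {hours / 24:.0f} days")
```

Under these assumptions the detector runs roughly 13 times faster than real time. Sampling only a fraction of frames, a common practice in video analysis, would reduce the estimate proportionally.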

Core Technologies

VFRAME creates high-fidelity synthetic data to develop custom object detection, semantic segmentation, and image classification technologies for high-risk objects including cluster munitions and anti-personnel mines. Read about our past and current research in the press: