VFRAME researches and develops state-of-the-art computer vision technologies for human rights research and conflict zone monitoring #

Update 2024: VFRAME is proud to partner with Tech4Tracing.org for future developments of this project. Tech4Tracing is an NGO based in Europe and the United States that works on arms control and the deployment of artificial intelligence for humanitarian applications.

VFRAME software and experiments are developed and maintained by Adam Harvey in Berlin with additional contributions from Josh Evans and a growing list of collaborators.

From 2017 to 2021, VFRAME collaborated with Mnemonic.org, an organization dedicated to helping human rights defenders effectively use digital documentation of human rights violations for justice and accountability, to pilot a project using computer vision to search for munitions in millions of videos. The results, completed in 2022, will be made publicly available in early 2025.

VFRAME’s early command line interface (CLI) image processing software and detection models are open-source with MIT licenses and available at github.com/vframeio. Additional tools are under development for open-source release.

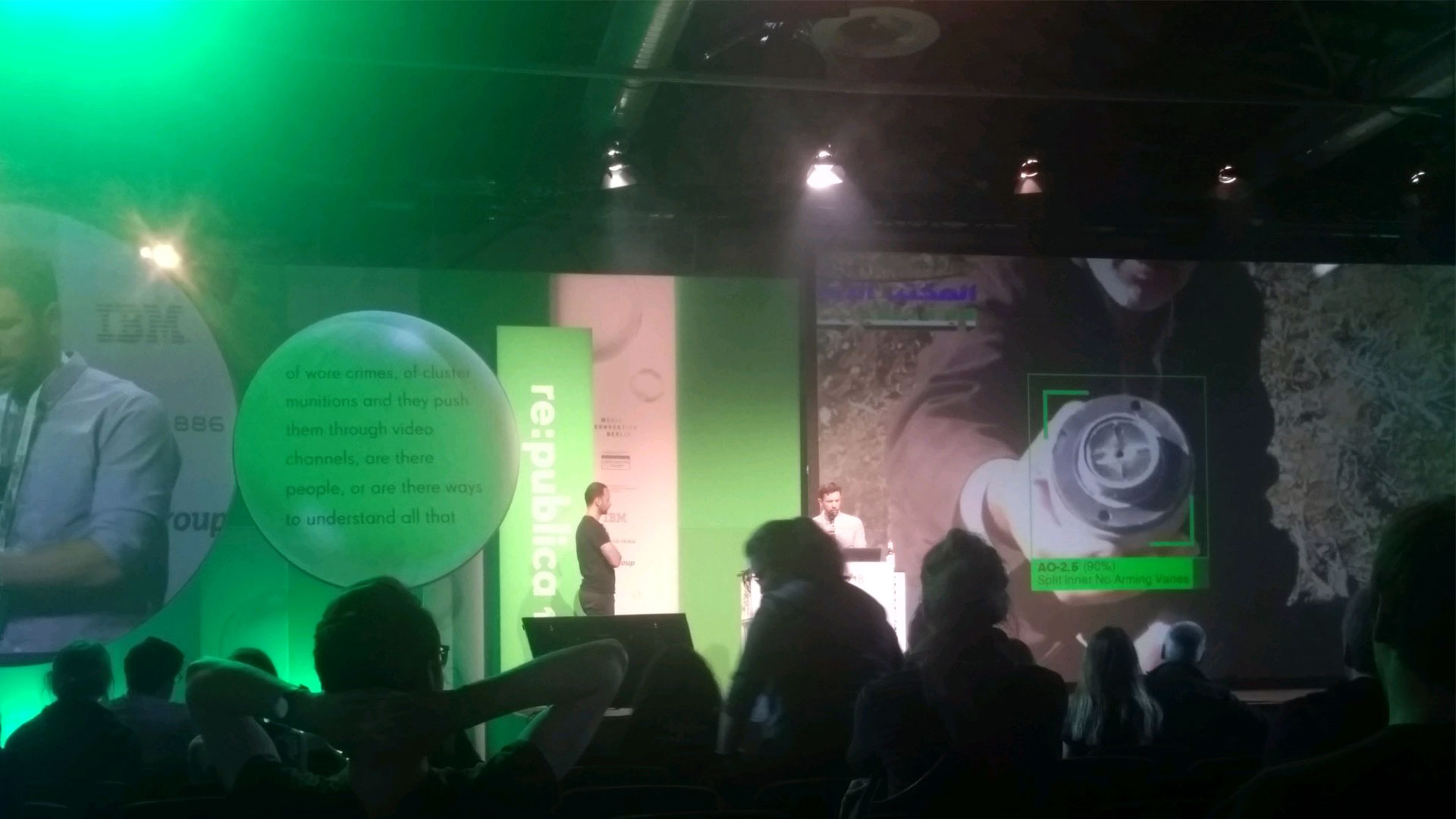

2018: Showing an early prototype of the AO-2.5RT object detector at re:publica 18 in Berlin with Hadi Al-Khatib from Syrian Archive

2022: Showing a functional AO-2.5RT object detector at the United Nations 8th Biennial Meeting of States on Small Arms and Light Weapons in partnership with Tech 4 Tracing.

Recent News and Events #

- 2023-2025: VFRAME engages in a multi-year partnership with Tech4Tracing.org with support from the European Union

- August 2022: VFRAME announces partnership with Tech 4 Tracing to access munitions for photogrammetry scanning and benchmark dataset captures

- June 2022: VFRAME presents at United Nations Eighth Biennial Meeting of States on Small Arms and Light Weapons in NYC

- February 2022: New video shows how VFRAME uses 3D rendered synthetic image training data to build object detection algorithms for cluster munitions

- December 2021: VFRAME featured in the Financial Times: Researchers train AI on ‘synthetic data’ to uncover Syrian war crimes

- November 2021: VFRAME launches DFACE.app, a privacy-focused web app to detect and blur faces in protest imagery. Code: github.com/vframeio/dface

Origins and Mission #

The idea for VFRAME grew out of discussions at a 2017 Data Investigation Camp organized by Tactical Technology Collective in Montenegro. Through meetings with investigative journalists, human rights researchers, and digital activists from around the world, it became clear that computer vision was a much-needed tool in this community, yet solutions were nowhere in sight.

Since then, VFRAME’s mission has been to research, prototype, and deploy computer vision systems that accelerate the capabilities of human rights researchers and investigative journalists.

Early research and development of VFRAME was supported by the Prototype Fund (DE), Swedish International Development Agency (SIDA), Meedan, and NLnet.

Thanks to Jules LaPlace for early development of web interfaces for visual search engine applications.

Contact #

- For inquiries about implementing computer vision, please contact Tech4Tracing.org

- For all other inquiries please contact research at vframe.io

- Tip: for the fastest reply, use a secure email service like ProtonMail (insecure emails that contain tracking links or tracking pixels may be automatically deleted)

Press Archive #

- Financial Times: Researchers train AI on ‘synthetic data’ to uncover Syrian war crimes

- Der Spiegel: How Artificial Intelligence Helps Solve War Crimes

- Wall Street Journal: AI emerges as Crucial Tool for Groups Seeking Justice for Syria War Crimes (paywall)

- MIT Technology Review: Human rights activists want to use AI to help prove war crimes in court

- Deutsche Welle: VFRAME and Syrian Archive discuss new technologies for analyzing conflict zone videos (Spanish) https://www.dw.com/es/enlaces-ventana-abierta-al-mundo-digital/av-48853416